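I'd long wanted to learn how to make a word cloud, and I'd heard that plenty of R packages can do it. So after some searching online, I downloaded nearly five months of chat history from a QQ bioinformatics discussion group and tried turning it into a word cloud, to see what everyone has been talking about...

1. First, export the chat history from mobile QQ; it comes out as a .txt file.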

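Read the .txt file into R. Because the file is mostly Chinese text saved as UTF-8, you need to set `encoding = "UTF-8"`:

```r
## skip = 3 skips the first three lines of the export (its header);
## quote = "" and comment.char = "" keep ' and # inside messages as plain text
data <- read.table(file = "qqchat.txt", sep = "\t", fill = TRUE,
                   encoding = "UTF-8", quote = "", comment.char = "",
                   skip = 3)
```

Next, load the R packages: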

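```r
library(rJava)
library(Rwordseg)      ## Chinese word segmentation (requires rJava)
library(RColorBrewer)
library(tm)
library(wordcloud)     ## static word clouds
library(wordcloud2)    ## interactive (HTML) word clouds
```

rJava and Rwordseg handle the word segmentation; Rwordseg can only be installed after rJava has been installed correctly.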

Sometimes, though, rJava installs fine and installing Rwordseg with the command below still throws an error:

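```r
install.packages("Rwordseg", repos = "http://R-Forge.R-project.org", type = "source")
```

In that case it has to be installed by hand. Since I'm on Windows, I downloaded the Rwordseg zip package from https://r-forge.r-project.org/R/?group_id=1054 and installed it with RStudio's Tools > Install Packages dialog (installing from a package archive file), and it went through without any problems.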

The wordcloud and wordcloud2 packages are used to draw the word clouds.

Next, preprocess the chat data (which mostly means filtering out unimportant words) and segment it into words.
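A detail worth checking before filtering lines: in a regular expression, `[图片]` is a character class that matches any single 图 or 片, not the literal placeholder text, and in `^2016|2017` the `^` anchors only the first alternative. The base-R sketch below compares that pattern with an escaped version on a few made-up lines. It assumes, as the filter in this step does, that QQ writes image and emoji placeholders as `[图片]` and `[表情]` and that timestamp lines start with the year.

```r
# Made-up chat lines for illustration only
chat_lines <- c(
  "2017-03-01 09:15:02 alice",  # timestamp line
  "[图片]",                      # image placeholder
  "这个图怎么画",                 # real question containing 图 ("how do I draw this plot")
  "谢谢分享 [表情]",              # message with an emoji placeholder
  "基因组组装用什么软件"          # ordinary message ("which software for genome assembly")
)

# Unescaped: [图片] and [表情] are character classes, ^ applies to 2016 only
naive_pattern <- "^2016|2017|[图片]|[表情]"
# Escaped: match the literal placeholders and anchor both years
escaped_pattern <- "^(2016|2017)|\\[图片\\]|\\[表情\\]"

# The naive pattern also throws away the genuine question on line 3
print(chat_lines[!grepl(naive_pattern, chat_lines)])
# The escaped pattern keeps lines 3 and 5
print(chat_lines[!grepl(escaped_pattern, chat_lines)])
```

Whether a line that mixes real text with a placeholder (line 4 here) should be dropped outright, or just have the tag stripped with `gsub()`, is a judgement call; the filter in this step drops the whole line.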

```r
## Drop timestamp lines (they start with the year) and lines containing
## image/emoji placeholders. The brackets must be escaped: written as
## [图片], they form a character class matching any line with 图 or 片.
data_clean <- subset(data, !grepl("^(2016|2017)|\\[图片\\]|\\[表情\\]", data$V1))
data_clean_vector <- as.character(data_clean$V1)
## Word segmentation with Rwordseg
words <- segmentCN(data_clean_vector)
## Strip digits from every word (all-digit tokens become "")
words <- gsub("\\d", "", unlist(words))
## Keep only words longer than one character (this also drops the "")
words <- words[nchar(words) > 1]
## Look at the segmentation result
words_freq <- table(words)
words_freq <- words_freq[order(words_freq, decreasing = TRUE)]
head(words_freq, 10)
```

Next, remove stop words. I found this tip online too: a page at http://m.blog.csdn.net/xiao_jun_0820/article/details/50381155 collects stop words for many languages, so I downloaded the Chinese list, copied it into an R vector and removed the words with the removeWords() function from tm. removeWords() turns the list into a regular expression, so entries such as `*`, `(` and `?` are escaped first:

```r
stop_words <- c("、","。","〈","〉","《","》","一","一切","一则","一方面","一旦","一来","一样","一般","七","万一","三","上下","不仅","不但","不光","不单","不只","不如","不怕","不惟","不成","不拘","不比","不然","不特","不独","不管","不论","不过","不问","与","与其","与否","与此同时","且","两者","个","临","为","为了","为什么","为何","为着","乃","乃至","么","之","之一","之所以","之类","乌乎","乎","乘","九","也","也好","也罢","了","二","于","于是","于是乎","云云","五","人家","什么","什么样","从","从而","他","他人","他们","以","以便","以免","以及","以至","以至于","以致","们","任","任何","任凭","似的","但","但是","何","何况","何处","何时","作为","你","你们","使得","例如","依","依照","俺","俺们","倘","倘使","倘或","倘然","倘若","借","假使","假如","假若","像","八","六","兮","关于","其","其一","其中","其二","其他","其余","其它","其次","具体地说","具体说来","再者","再说","冒","冲","况且","几","几时","凭","凭借","则","别","别的","别说","到","前后","前者","加之","即","即令","即使","即便","即或","即若","又","及","及其","及至","反之","反过来","反过来说","另","另一方面","另外","只是","只有","只要","只限","叫","叮咚","可","可以","可是","可见","各","各个","各位","各种","各自","同","同时","向","向着","吓","吗","否则","吧","吧哒","吱","呀","呃","呕","呗","呜","呜呼","呢","呵","呸","呼哧","咋","和","咚","咦","咱","咱们","咳","哇","哈","哈哈","哉","哎","哎呀","哎哟","哗","哟","哦","哩","哪","哪个","哪些","哪儿","哪天","哪年","哪怕","哪样","哪边","哪里","哼","哼唷","唉","啊","啐","啥","啦","啪达","喂","喏","喔唷","嗡嗡","嗬","嗯","嗳","嘎","嘎登","嘘","嘛","嘻","嘿","四","因","因为","因此","因而","固然","在","在下","地","多","多少","她","她们","如","如上所述","如何","如其","如果","如此","如若","宁","宁可","宁愿","宁肯","它","它们","对","对于","将","尔后","尚且","就","就是","就是说","尽","尽管","岂但","己","并","并且","开外","开始","归","当","当着","彼","彼此","往","待","得","怎","怎么","怎么办","怎么样","怎样","总之","总的来看","总的来说","总的说来","总而言之","恰恰相反","您","慢说","我","我们","或","或是","或者","所","所以","打","把","抑或","拿","按","按照","换句话说","换言之","据","接着","故","故此","旁人","无宁","无论","既","既是","既然","时候","是","是的","替","有","有些","有关","有的","望","朝","朝着","本","本着","来","来着","极了","果然","果真","某","某个","某些","根据","正如","此","此外","此间","毋宁","每","每当","比","比如","比方","沿","沿着","漫说","焉","然则","然后","然而","照","照着","甚么","甚而","甚至","用","由","由于","由此可见","的","的话","相对而言","省得","着","着呢","矣","离","第","等","等等","管","紧接着","纵","纵令","纵使","纵然","经","经过","结果","给","继而","综上所述","罢了","者","而","而且","而况","而外","而已","而是","而言","能","腾","自","自个儿",
                "自从","自各儿","自家","自己","自身","至","至于","若","若是","若非","莫若","虽","虽则","虽然","虽说","被","要","要不","要不是","要不然","要么","要是","让","论","设使","设若","该","诸位","谁","谁知","赶","起","起见","趁","趁着","越是","跟","较","较之","边","过","还是","还有","这","这个","这么","这么些","这么样","这么点儿","这些","这会儿","这儿","这就是说","这时","这样","这边","这里","进而","连","连同","通过","遵照","那","那个","那么","那么些","那么样","那些","那会儿","那儿","那时","那样","那边","那里","鄙人","鉴于","阿","除","除了","除此之外","除非","随","随着","零","非但","非徒","靠","顺","顺着","首先","︿","!","#","$","%","&","(",")","*","+",",","0","1","2","3","4","5","6","7","8","9",":",";","<",">","?","@","[","]","{","|","}","~","¥")
## Escape regex metacharacters so that entries like * ( ? match literally
stop_words_escaped <- gsub("([][{}()+*^$|\\\\?.])", "\\\\\\1", stop_words, perl = TRUE)
words_pass <- removeWords(names(words_freq), stop_words_escaped)
```

Running `head(words_pass, 20)` showed that a few irrelevant words were still left, so I removed those by hand:

```r
words_clean_freq <- as.data.frame(words_freq[words_pass])
## Stop words were replaced by "", which look up as NA in the table; drop those rows
words_clean_freq <- words_clean_freq[!is.na(words_clean_freq$words), ]
## Manually drop words containing 一 (one), 二 (two) or 没 (not)
words_clean_freq <- subset(words_clean_freq, !grepl("一|二|没", words_clean_freq$words))
```

With those steps done, the words and their frequencies are ready to plot. I took the 500 most frequent words and drew them with the wordcloud() function from the wordcloud package, shown below. It looked pretty ugly... Checking ?wordcloud, there didn't seem to be much room for tuning either, so I went straight back online to look for a better-looking word cloud package.

```r
words_clean_freq_top <- head(words_clean_freq, 500)
## Numeric colours index palette(), so 1:50 just cycles through its 8 colours
wordcloud(words_clean_freq_top$words, words_clean_freq_top$Freq,
          random.order = FALSE, random.color = FALSE, colors = c(1:50))
```

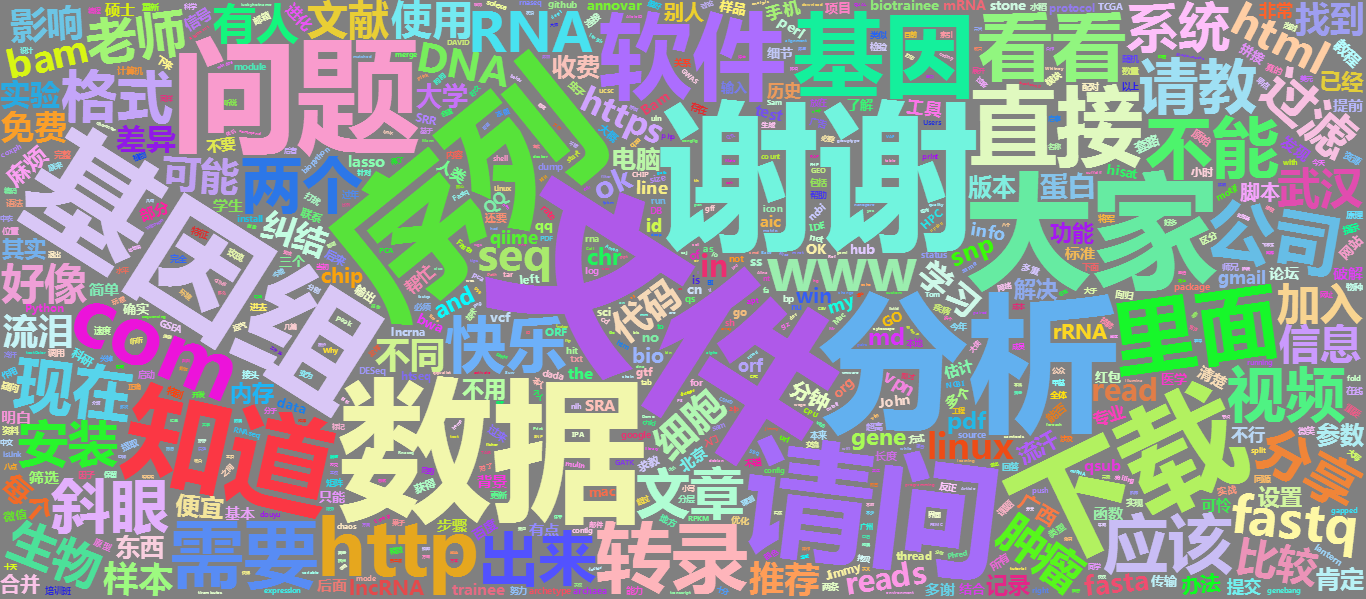
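On the stop-word step above: removeWords() is built for running text. As far as I can tell, on single tokens it either leaves a word unchanged or, when the whole token is a stop word, replaces it with an empty string; looking up "" in the frequency table then gives NA, which is why the `is.na()` clean-up is needed. Since every element is already a complete word, exact matching with `%in%` should reach the same result with no regular expression and no NA round-trip. A minimal base-R sketch on made-up tokens, where `freq_without_stop_words()` is a hypothetical helper rather than anything from tm:

```r
# Hypothetical helper: word frequencies (most frequent first) with stop
# words dropped by exact matching rather than regex replacement.
freq_without_stop_words <- function(tokens, stop_words) {
  freq <- table(tokens)
  freq <- freq[order(freq, decreasing = TRUE)]
  freq[!names(freq) %in% stop_words]
}

# Made-up tokens for illustration only
tokens <- c("分析", "我们", "文件", "文件", "分析", "文件", "*", "数据")
# Punctuation such as "*" needs no escaping: %in% compares strings literally
stop_words <- c("我们", "*", "(")

freq <- freq_without_stop_words(tokens, stop_words)
print(freq)
```

Only exact matches are dropped, so a word that merely contains a stop word, such as 我们的, is kept.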

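Then, with help from [http://blog.csdn.net/sinat_26917383/article/details/51620019](http://blog.csdn.net/sinat_26917383/article/details/51620019), I switched to the wordcloud2 package and picked one of its example styles more or less at random. Sure enough, it looked much better...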

```r
## "微软雅黑" is the Microsoft YaHei font
wordcloud2(words_clean_freq_top, size = 1, fontFamily = "微软雅黑",
           color = "random-light", backgroundColor = "grey")
```

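The `wordcloud2` function in the wordcloud2 package lets you choose the font and one of several built-in shapes, and it can even lay the cloud out in the shape of an image you supply, which is really handy. It can also output HTML (I exported it from RStudio), and hovering the mouse over a word in the HTML page shows how many times that word appears!

- With the word cloud drawn, the obvious next step is to see what everyone has been discussing. For a group about bioinformatics analysis, 分析 (analysis) is naturally among the top few words, and 文件 (file) looks like the single most frequent word. 基因组 (genome) confirms that genomics is still the most widely used analysis area (as far as bioinformatics goes), 数据 (data) is of course indispensable as well, and 谢谢 (thanks) shows that everyone is very polite.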
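Judging rank from font size is approximate; the frequency table gives it directly. A minimal sketch, assuming a data frame shaped like `words_clean_freq` (a `words` column and a `Freq` column). `word_rank()` is a hypothetical helper, and the counts below are invented for illustration, not the group's actual numbers:

```r
# Invented counts for illustration only, not the group's real statistics
freq_df <- data.frame(
  words = c("数据", "分析", "文件", "谢谢", "基因组"),
  Freq  = c(120L, 310L, 450L, 95L, 260L),
  stringsAsFactors = FALSE
)

# Hypothetical helper: rank of each target word by descending frequency
# (1 = most frequent); NA when the word is not in the table.
word_rank <- function(freq_df, targets) {
  ranked <- freq_df$words[order(freq_df$Freq, decreasing = TRUE)]
  setNames(match(targets, ranked), targets)
}

ranks <- word_rank(freq_df, c("文件", "分析", "谢谢", "测序"))
print(ranks)
```

Called on the real `words_clean_freq`, the same helper would report where words such as 分析 and 文件 actually land.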

This article originally appeared on http://www.bioinfo-scrounger.com; please credit the source when reposting.